The independent body that reviews how the owner of Facebook moderates online content has said the firm should label fake posts rather than remove them.

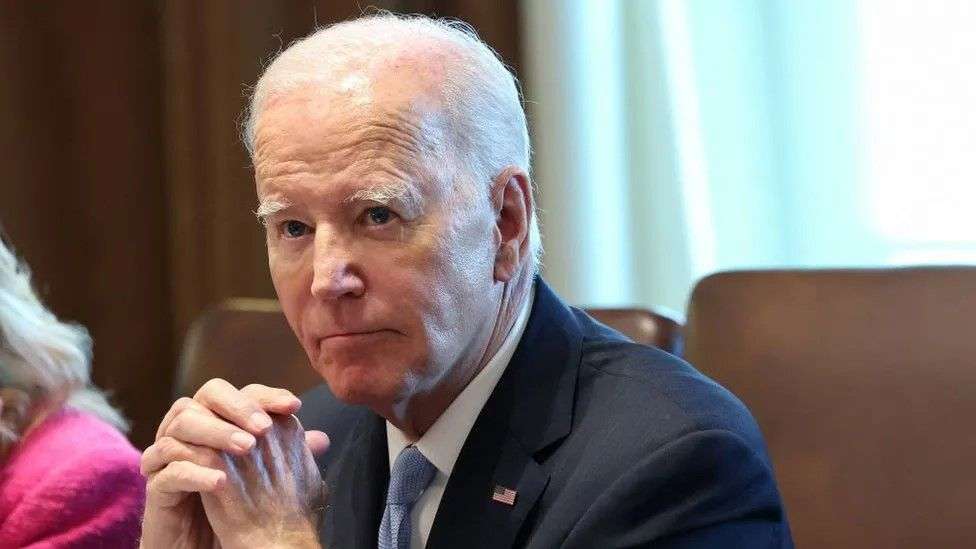

The Oversight Board said Meta was right not to remove a fake video of US President Joe Biden because it did not violate its manipulated media policy.

But it said the policy was "incoherent" and should be widened beyond its current scope ahead of a busy election year.

A Meta spokesperson told the OceanNewsUK it was "reviewing" the guidance.

"[We] will respond publicly to their recommendations within 60 days in accordance with the bylaws," Meta said.

The Oversight Board called for more labelling of fake material on Facebook, particularly where it cannot be removed for violating a specific policy.

It said this could reduce reliance on third-party fact checkers, offer a "more scalable way" to enforce Meta's manipulated media policy and inform users about fake or altered content.

It added that it was concerned users might not be told whether or why content had been demoted or removed, or how to appeal such decisions.

In 2021 - its first year of accepting appeals - Meta's board heard more than a million appeals over posts removed from Facebook and Instagram.

'Makes little sense'

The video in question edited existing footage of the US President with his granddaughter to make it appear as though he was touching her inappropriately.

Because it was not manipulated using artificial intelligence, and depicted Mr Biden behaving in a way he did not, rather than saying something he did not, it did not violate Meta's manipulated media policy - and was not removed.

Michael McConnell, co-chair of the Oversight Board, said the policy in its current form "makes little sense".

"It bans altered videos that show people saying things they do not say, but does not prohibit posts depicting an individual doing something they did not do," he said.

He added that its sole focus on video, and only those created or altered using AI, "lets other fake content off the hook" - identifying fake audio as "one of the most potent forms" of electoral disinformation.

Audio deep fakes, often created using generative AI tools which can clone or manipulate someone's voice to suggest they said things they have not, appear to be on the rise.

In January a fake robocall claiming to be from President Biden, believed to be artificially generated, urged voters to skip a primary election in New Hampshire.

"The volume of misleading content is rising, and the quality of tools to create it is rapidly increasing," Mr McConnell said.

"At the same time, political speech must be unwaveringly protected. This sometimes includes claims that are disputed and even false, but not demonstrably harmful," he added.

'Cheap fakes'

Sam Gregory, executive director of human rights organisation Witness, said the platform should have an adaptive policy that addresses so-called "cheap fakes" as well as AI-generated or altered material. But he said this should not be overly restrictive and risk removing satirical or AI-altered content which is not designed to be misleading.

"One strength of Meta's existing manipulated media policy was its evaluation, which was based on whether it would 'mislead an average person'," he said.

The Oversight Board said it was "obvious" the clip of President Biden had been altered and so it was unlikely to mislead average users.

"Since the quality of AI deception and the ways you can do it keeps improving and shifting this is an important element to keep the policy dynamic as AI and usage gets more pervasive or more deceptive, or people get more accustomed to it," Mr Gregory said.

He added that focusing on labelling fake posts would be an effective solution for some content, such as videos which have been recycled or recirculated from a previous event. But he was sceptical about the effectiveness of automatically labelling content manipulated using emerging AI tools.

"Explaining manipulation requires contextual knowledge," he said.

"Countries in the Global Majority world will be disadvantaged both by poor-quality automated labelling of content and lack of resourcing to trust and safety and content moderation teams and independent journalism and fact-checking."